Event-driven architectures in payments

Unlocking Agility and Efficiency: How Event-Driven Architectures Transform Financial Institutions

Welcome to The Engineer Banker, a weekly newsletter dedicated to organizing and delivering insightful technical content on the payments domain, making it easy for you to follow and learn at your own pace.

Today, we're excited to present a comprehensive article that delves into the role of event-driven architectures in financial institutions, particularly in the complex ecosystem of banking. A bank is a multifaceted organization, with numerous functional departments such as compliance, risk management, customer service, and transaction processing, each serving as a cog in a well-oiled machine. Remarkably, the technical infrastructure of a bank often mirrors its organizational structure, an observation that aligns with Conway’s Law, which posits that system designs are bound to emulate the communication structures of the organizations that create them.

Understanding the intricacies of how these diverse departments and their corresponding technical systems communicate and interact with each other is key to grasping the agility and scalability required for modern banking. If you're new to the foundational elements of banking systems, we highly recommend that you read our introductory article on the anatomy of payments infrastructure. This will offer you a holistic view of the building blocks that form the underpinnings of a bank's operations.

In such a complex landscape, the need for efficient, robust, and scalable communication between these systems becomes apparent. This is where event-driven architectures come into play. This architectural style facilitates the decoupling of systems, allowing each to operate autonomously while still being part of an integrated whole. Through the use of events, services can publish, subscribe to, and consume data asynchronously, thereby enhancing operational efficiency and responsiveness.

For example, consider a payment processing unit in a bank. When a new transaction comes in, an event can be emitted to notify risk assessment, fraud detection, and customer notification services. These services can independently pick up the event, process it in their own specialized way, and then emit new events to signal completion or trigger additional actions. This way, each unit can operate autonomously, yet still contribute to a unified, streamlined operation.
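To make this fan-out concrete, here is a minimal in-memory sketch. The `EventBus` class, the `payment.received` topic, and the three handler functions are hypothetical stand-ins for a real broker and real services; the point is only that the publisher names a topic and never references its subscribers.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)
class Event:
    topic: str      # hypothetical topic name, e.g. "payment.received"
    payload: dict   # event data; its shape is an assumption for this sketch


class EventBus:
    """Toy in-memory broker: delivers each published event to every subscriber of its topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        # The publisher does not know who is listening; it only names the topic.
        for handler in self._subscribers[event.topic]:
            handler(event)


log: List[str] = []

def risk_assessment(event: Event) -> None:
    log.append(f"risk checked {event.payload['payment_id']}")

def fraud_detection(event: Event) -> None:
    log.append(f"fraud screened {event.payload['payment_id']}")

def customer_notification(event: Event) -> None:
    log.append(f"customer notified {event.payload['payment_id']}")


bus = EventBus()
for service in (risk_assessment, fraud_detection, customer_notification):
    bus.subscribe("payment.received", service)

bus.publish(Event("payment.received", {"payment_id": "pay-001", "amount": "125.00"}))
```

After the `publish` call, `log` holds one entry per subscribed service. A production broker would also deliver events durably and asynchronously, which this synchronous toy does not attempt.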

By embracing event-driven architectures, financial institutions can not only improve internal efficiencies but also adapt more readily to changing business requirements and technological advancements. This contributes to an agile, resilient, and scalable operation capable of meeting the multifaceted demands of modern banking.

Event-Driven Architectures (EDAs) have emerged as a crucial technological strategy for meeting the agility, scalability, and real-time processing demands of modern payments. Unlike traditional request-response architectures, which can become bottlenecks in the fast-paced world of transactions, EDAs offer a robust and flexible framework for handling many operations concurrently.

In an Event-Driven Architecture, the core idea is that changes in state, or events, are captured in real time and processed asynchronously. These events could range from a customer initiating a payment or a batch of transactions being cleared to a fraud detection algorithm flagging suspicious activity. Each event is an atomic chunk of data that can be acted upon independently, which opens the door to highly parallel and distributed processing.

Events flow through the system, being consumed, produced, and often transformed along the way. Each event records a meaningful business fact and carries the data that describes it.

Let's consider a typical payment journey to understand how EDA could be applied. When a customer initiates a payment, an event is generated and placed on a message queue or broker. Various services can subscribe to these events, each responsible for a particular aspect of the transaction, such as authorization, risk assessment, and fund reservation. Because these services operate asynchronously, each can process the event when it has capacity, which helps keep system resources well utilized. Once processed, these services can generate additional events that other services consume, creating a chain of operations linked together through events.
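The chain of operations can be sketched with `asyncio` queues standing in for broker topics. The topic names, the authorization rule (a limit of 1,000.00 expressed in cents), and both service functions are illustrative assumptions; each service consumes one kind of event and emits the next.

```python
import asyncio
from typing import Dict, List


async def authorization(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    # Consumes "payment.initiated" events and emits "payment.authorized" or "payment.rejected".
    while True:
        event = await inbox.get()
        approved = event["amount_cents"] <= 100_000  # hypothetical authorization rule
        await outbox.put({**event, "type": "payment.authorized" if approved else "payment.rejected"})
        inbox.task_done()


async def fund_reservation(inbox: asyncio.Queue, results: List[Dict]) -> None:
    # Reacts only to authorized payments and records a "funds.reserved" event.
    while True:
        event = await inbox.get()
        if event["type"] == "payment.authorized":
            results.append({**event, "type": "funds.reserved"})
        inbox.task_done()


async def run_journey(payments: List[Dict]) -> List[Dict]:
    initiated: asyncio.Queue = asyncio.Queue()
    authorized: asyncio.Queue = asyncio.Queue()
    reserved: List[Dict] = []
    workers = [
        asyncio.create_task(authorization(initiated, authorized)),
        asyncio.create_task(fund_reservation(authorized, reserved)),
    ]
    for payment in payments:
        await initiated.put({**payment, "type": "payment.initiated"})
    # Wait until each stage has processed every event, then stop the workers.
    await initiated.join()
    await authorized.join()
    for worker in workers:
        worker.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return reserved


reserved = asyncio.run(run_journey([
    {"payment_id": "p1", "amount_cents": 5_000},
    {"payment_id": "p2", "amount_cents": 250_000},
]))
```

Only `p1` reaches fund reservation; `p2` is rejected by the first stage, and the reservation service never needs to know why.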

This architecture has several advantages in a payments context. First, it allows for highly decoupled systems. The service responsible for fraud detection, for instance, doesn't need to know anything about the service that handles currency conversion. This modularity makes the system easier to manage, test, and scale. Second, the asynchronous nature of EDA helps the system stay responsive and cope with variable loads, a must-have feature especially during peak transaction times like holidays or during specific market events.

What is an event?

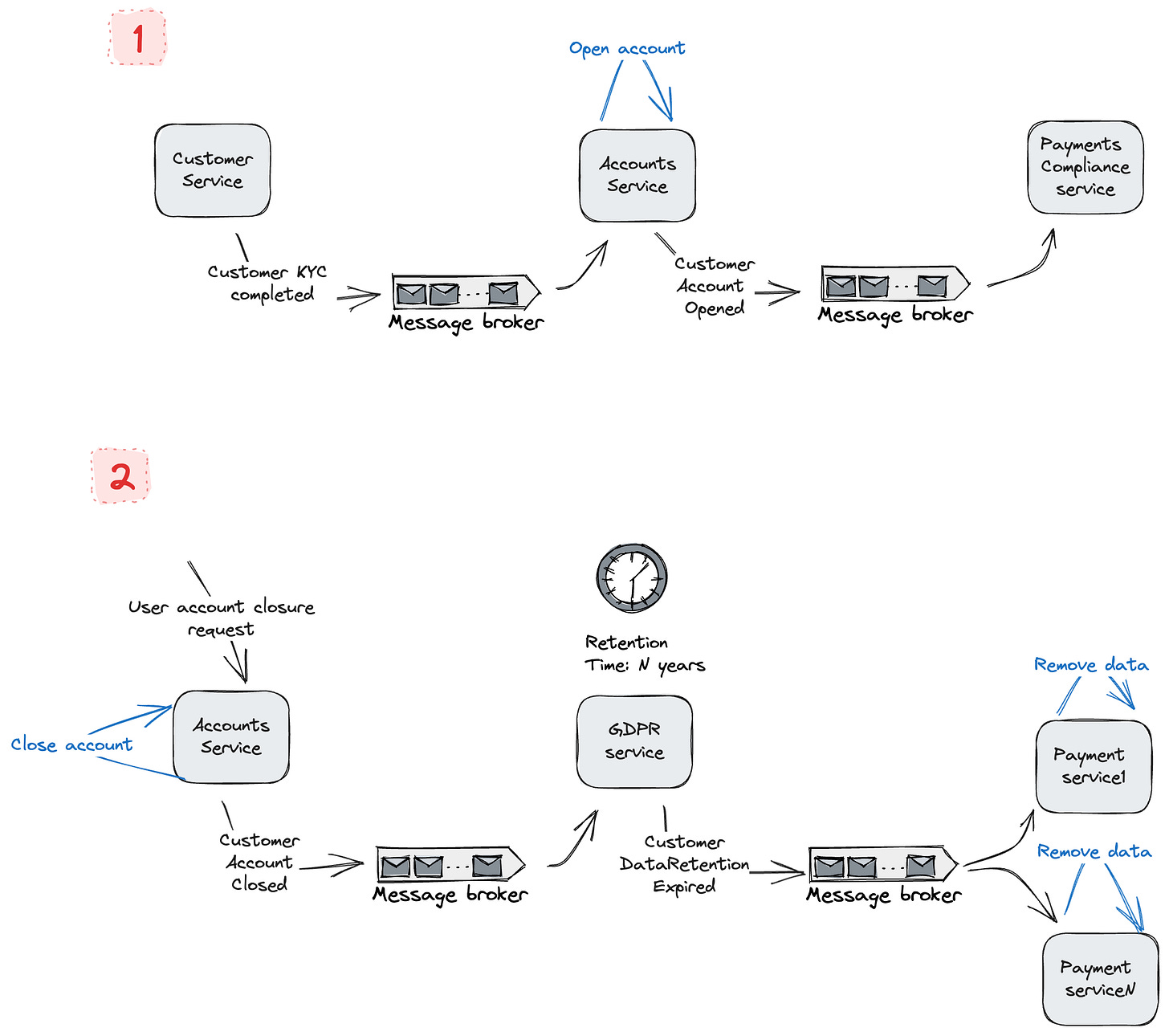

An event is a message that is sent as a reaction to something that has happened, typically a change in state of a resource in a particular system. For example, when you create an account in a bank, the account can transition through multiple states based on external actions that affect it. Below, you'll find two illustrative examples, among many possibilities, that demonstrate how events interconnect various business processes. The first example shows how payment functionality can be enabled for a specific customer after they successfully complete Know Your Customer (KYC) procedures. When the customer finishes the KYC steps, the account status changes. In response to this state change, the accounts service broadcasts a message to notify relevant systems about the updated account status.
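The KYC example can be sketched as a small state machine that records an event whenever the account status actually changes. The status names, the `AccountStatusChanged` event shape, and the `outbox` list are assumptions for illustration; a real accounts service would publish to its broker rather than append to a list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List


class AccountStatus(Enum):
    PENDING_KYC = "pending_kyc"
    ACTIVE = "active"   # hypothetical: payments enabled once KYC passes
    CLOSED = "closed"


@dataclass(frozen=True)
class AccountStatusChanged:
    account_id: str
    previous: AccountStatus
    current: AccountStatus
    occurred_at: datetime


@dataclass
class Account:
    account_id: str
    status: AccountStatus = AccountStatus.PENDING_KYC
    outbox: List[AccountStatusChanged] = field(default_factory=list)  # stands in for a broker

    def _transition(self, new_status: AccountStatus) -> None:
        if new_status is self.status:
            return  # no state change, so no event
        event = AccountStatusChanged(self.account_id, self.status, new_status,
                                     datetime.now(timezone.utc))
        self.status = new_status
        self.outbox.append(event)

    def complete_kyc(self) -> None:
        if self.status is not AccountStatus.PENDING_KYC:
            raise ValueError(f"cannot complete KYC from {self.status.value}")
        self._transition(AccountStatus.ACTIVE)


account = Account("acc-42")
account.complete_kyc()
```

A payments service subscribed to `AccountStatusChanged` could enable payments when it sees `current == AccountStatus.ACTIVE`, without ever calling the accounts service.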

In the second example, we explore a distinct scenario that demonstrates the importance of time as an actor in our systems, particularly in the context of compliance with General Data Protection Regulation (GDPR). Unlike traditional actors in financial systems such as customers, agents, and operations personnel, time can also be an actor affecting the state of specific records.

Let's consider the case where a customer decides to close their account with the financial institution. After the account is closed, the institution's GDPR compliance service schedules the removal of all the customer's personally identifiable information (PII) in accordance with GDPR requirements. This entails a predetermined retention period after closure, during which the customer's data must be stored securely before it can be purged. The retention period varies based on the type of data and the applicable legal requirements, but it serves a critical purpose: it allows for audits, dispute resolution, and any other legal obligations that may require the institution to access historical data.

As the end of the retention period approaches, the GDPR service becomes an active participant in the system. When the retention deadline is reached, the service emits an event signaling to downstream services that the time for data removal has come. These downstream services, which could range from customer management systems to transaction histories, are then responsible for executing the data manipulation steps required to remove the customer's PII.
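Because time is the actor here, the GDPR service can be sketched as a periodic job that compares each closure's retention deadline with the current time and emits an erasure event for every deadline that has passed. The `RetentionRecord` fields, the `pii.erasure.due` event type, and the retention periods used below are hypothetical. The clock is passed in explicitly so the job is easy to test, and each event gets a unique ID so it can be traced and logged.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Set


@dataclass(frozen=True)
class RetentionRecord:
    customer_id: str
    closed_at: datetime
    retention: timedelta  # hypothetical; varies by data category in practice

    @property
    def deadline(self) -> datetime:
        return self.closed_at + self.retention


def emit_due_erasures(records: List[RetentionRecord], now: datetime,
                      already_emitted: Set[str]) -> List[Dict]:
    """Return one erasure event per customer whose retention deadline has passed.

    `already_emitted` keeps the job from announcing the same customer twice
    when it runs again on a later tick.
    """
    events = []
    for record in records:
        if record.deadline <= now and record.customer_id not in already_emitted:
            events.append({
                "event_id": str(uuid.uuid4()),  # unique id for tracing and audit logs
                "type": "pii.erasure.due",      # hypothetical event type
                "customer_id": record.customer_id,
                "deadline": record.deadline.isoformat(),
                "emitted_at": now.isoformat(),
            })
            already_emitted.add(record.customer_id)
    return events


closed = datetime(2020, 1, 15, tzinfo=timezone.utc)
records = [
    RetentionRecord("cust-1", closed, timedelta(days=5 * 365)),
    RetentionRecord("cust-2", closed, timedelta(days=365)),
]
emitted: Set[str] = set()
first_run = emit_due_erasures(records, datetime(2022, 1, 1, tzinfo=timezone.utc), emitted)
second_run = emit_due_erasures(records, datetime(2022, 1, 2, tzinfo=timezone.utc), emitted)
```

In this sketch the `emitted` set lives in memory; a real service would need to persist that state so a restart does not re-announce or skip erasures.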

Now, what makes this event particularly critical is the stringent nature of GDPR penalties. Non-compliance can result in hefty fines, which makes it essential for all downstream services to respond promptly and effectively to the event emitted by the GDPR service. Moreover, failing to remove customer data post-retention could compromise the customer's privacy and leave the institution vulnerable to data breaches, thereby exacerbating both financial and reputational risks.

It's also worth noting that the GDPR service must ensure that the emitted event is secure, traceable, and logged for accountability. It must also be designed to trigger data removal from backups, logs, and any third-party services that the institution might be using. Hence, the GDPR service plays a vital role not only in automating the data removal process but also in ensuring that it is carried out in full compliance with GDPR standards.

Having explored various examples of events within event-driven architectures, it's time to delve into the categorization of these events. The types of events can be distinguished based on the volume of state and data they encapsulate, leading us to define distinct classifications for them.

Notifications

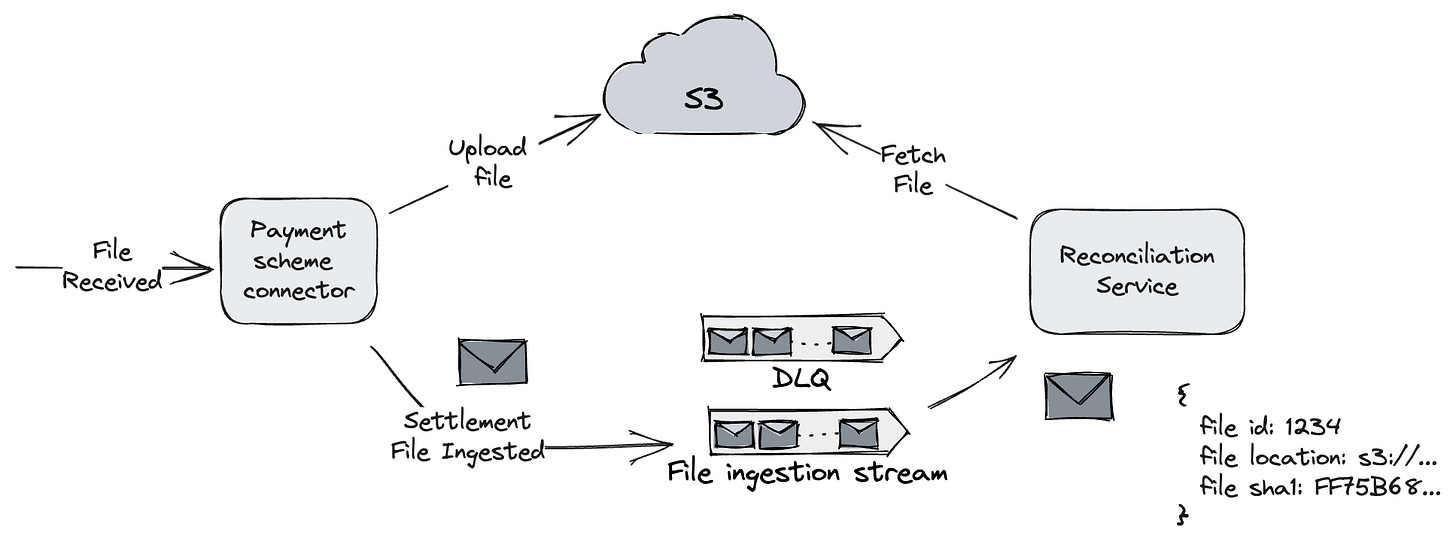

An event has notification semantics when it does not carry information about the resource or record that has been mutated. The event purely signals that something has happened and usually includes a link the consumer can follow to fetch more information if needed. These events work well when the receiving system only needs to know that a particular action has happened. Event notifications are also useful when the data payload is too large for queues and brokers to carry along with the event. A prime example is the event generated when a file has been uploaded: the notification does not carry the file itself, but rather a URL where the file can be downloaded. This allows downstream business processes to be triggered efficiently without overwhelming the system's data handling capabilities.

In this illustrative example, a dedicated service serves as the intermediary for connecting to the payment scheme. This service is tasked with downloading a settlement file that contains crucial payment-related information. After securely downloading the settlement file, the service uploads it to an Amazon S3 storage bucket for enhanced security and accessibility. It then publishes a notification event to alert the consumer services that have registered an interest in the file's content. Upon receiving the notification, these consumer services are given the necessary credentials or links to securely access and download the file from S3. This process helps preserve data integrity and distributes critical financial information efficiently among the interested parties.
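A notification event for the settlement file might carry only a pointer to the stored object, never the file. In this sketch the field names, the `settlement.file.available` type, the bucket and key, and the SHA-256 checksum (an addition of ours, used to verify the download) are hypothetical. The `fetch` callable stands in for whatever storage client the consumer uses, so the example runs without cloud access.

```python
import hashlib
import json
from typing import Callable, Dict, Tuple


def build_file_notification(bucket: str, key: str, content: bytes) -> str:
    # The event carries where the file lives plus a checksum, not the file itself.
    return json.dumps({
        "type": "settlement.file.available",  # hypothetical event type
        "location": {"bucket": bucket, "key": key},
        "size_bytes": len(content),
        "sha256": hashlib.sha256(content).hexdigest(),
    })


def handle_file_notification(raw_event: str, fetch: Callable[[str, str], bytes]) -> bytes:
    event = json.loads(raw_event)
    loc = event["location"]
    content = fetch(loc["bucket"], loc["key"])  # the consumer pulls the payload on demand
    if hashlib.sha256(content).hexdigest() != event["sha256"]:
        raise ValueError("downloaded file does not match the announced checksum")
    return content


# An in-memory dict plays the role of the object store in this sketch.
store: Dict[Tuple[str, str], bytes] = {
    ("settlements", "2024-05-01/scheme.csv"): b"ref,amount\nTX1,100.00\n",
}
raw = build_file_notification("settlements", "2024-05-01/scheme.csv",
                              store[("settlements", "2024-05-01/scheme.csv")])
downloaded = handle_file_notification(raw, lambda bucket, key: store[(bucket, key)])
```

The broker only ever sees the small JSON document in `raw`; the file contents travel through the storage layer.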

Among these interested consumers is a specialized service responsible for reconciliation. This reconciliation service takes the settlement file, parses its entries, and matches each entry against the clearing information it has previously received from other data streams or channels.
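The matching step can be sketched as a join on a shared reference. The CSV columns (`ref`, `amount`), the clearing records keyed by the same reference, and the four outcome buckets are assumptions; real settlement files follow scheme-specific formats. Amounts are parsed as `Decimal` to avoid floating-point rounding.

```python
import csv
import io
from decimal import Decimal
from typing import Dict, List


def reconcile(settlement_csv: str, cleared: Dict[str, Decimal]) -> Dict[str, List[str]]:
    """Compare settlement entries with previously received clearing records by reference."""
    result: Dict[str, List[str]] = {
        "matched": [], "amount_mismatch": [], "missing_clearing": [], "missing_settlement": [],
    }
    settled_refs = set()
    for row in csv.DictReader(io.StringIO(settlement_csv)):
        ref, amount = row["ref"], Decimal(row["amount"])
        settled_refs.add(ref)
        if ref not in cleared:
            result["missing_clearing"].append(ref)
        elif cleared[ref] != amount:
            result["amount_mismatch"].append(ref)
        else:
            result["matched"].append(ref)
    # Cleared but never settled: these also need investigation.
    result["missing_settlement"] = sorted(set(cleared) - settled_refs)
    return result


report = reconcile(
    "ref,amount\nTX1,100.00\nTX2,50.00\nTX3,10.00\n",
    {"TX1": Decimal("100.00"), "TX2": Decimal("55.00"), "TX4": Decimal("20.00")},
)
```

Here `TX1` matches, `TX2` settles for a different amount than it cleared for, `TX3` settled without a clearing record, and `TX4` cleared but never settled.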

Depending on the process it runs, a downstream service may need context about the action that happened in the upstream service. For this reason, some events carry state from the upstream service to the downstream service, minimizing the number of calls back to the producer. This pattern is called event-carried state transfer.
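With event-carried state transfer, a consumer can keep its own read-only copy of the upstream data and answer questions locally. In this sketch the event shape (`customer_id`, `version`, `data`), the `risk_tier` attribute, and the `CustomerReplica` class are hypothetical; the per-record version check is one common way to ignore stale or replayed events.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CustomerReplica:
    """Local copy of customer data, built only from events; no calls to the producer."""
    records: Dict[str, dict] = field(default_factory=dict)
    versions: Dict[str, int] = field(default_factory=dict)

    def apply(self, event: dict) -> None:
        customer_id, version = event["customer_id"], event["version"]
        # Skip events older than (or equal to) what we already hold.
        if version <= self.versions.get(customer_id, 0):
            return
        self.records[customer_id] = dict(event["data"])
        self.versions[customer_id] = version

    def risk_tier(self, customer_id: str) -> Optional[str]:
        record = self.records.get(customer_id)
        return record["risk_tier"] if record else None


replica = CustomerReplica()
replica.apply({"customer_id": "c1", "version": 1, "data": {"risk_tier": "low"}})
replica.apply({"customer_id": "c1", "version": 3, "data": {"risk_tier": "high"}})
replica.apply({"customer_id": "c1", "version": 2, "data": {"risk_tier": "medium"}})  # stale, ignored
```

The trade-off is that the replica is only eventually consistent with the producer, which is acceptable for many read paths but worth stating explicitly per use case.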

Depending on the amount of state that the upstream service propagates, we talk about thin or fat events.

Delta vs Snapshot Events

Depending on the volume of state information that an upstream service propagates downstream, events can be categorized as either thin or fat. In the context of event-driven architectures, a thin event typically carries minimal state information, often just enough to signal that a change has occurred. It may include identifiers or keys that allow the downstream service to fetch more details if needed. For example, a thin event might merely announce that a new transaction has been posted and provide a transaction ID for reference.

On the other hand, a fat event contains a more comprehensive set of data, capturing a richer snapshot of the state at the time of the event. This might include details like account balances, customer information, and transaction metadata, eliminating the need for a downstream service to make additional calls to gather more context. A fat event could be highly useful in scenarios requiring immediate, complex decision-making based on the event, such as fraud detection mechanisms that need to analyze multiple variables rapidly.
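The difference is easiest to see side by side. Below, the same posted transaction is published once as a thin event and once as a fat event. The field names and the `fetch_transaction` lookup, which stands in for a call back to the producer's API, are hypothetical.

```python
from typing import Callable, Dict, List


def thin_event(txn: Dict) -> Dict:
    # Only an identifier: consumers must call back for details.
    return {"type": "transaction.posted", "transaction_id": txn["id"]}


def fat_event(txn: Dict, balance_after: str) -> Dict:
    # Carries the context a consumer such as fraud detection is likely to need.
    return {
        "type": "transaction.posted",
        "transaction_id": txn["id"],
        "amount": txn["amount"],
        "currency": txn["currency"],
        "account_id": txn["account_id"],
        "balance_after": balance_after,
    }


def amount_of(event: Dict, fetch_transaction: Callable[[str], Dict]) -> str:
    # A consumer that handles both shapes: use the payload if present, otherwise call back.
    if "amount" in event:
        return event["amount"]
    return fetch_transaction(event["transaction_id"])["amount"]


txn = {"id": "t-9", "amount": "42.50", "currency": "EUR", "account_id": "acc-42"}
calls: List[str] = []

def fetch_transaction(txn_id: str) -> Dict:
    calls.append(txn_id)  # count round trips to the producer
    return txn


from_thin = amount_of(thin_event(txn), fetch_transaction)
from_fat = amount_of(fat_event(txn, "1042.50"), fetch_transaction)
```

Both paths yield the same amount, but only the thin event costs a round trip to the producer, which is exactly the bandwidth-versus-latency trade-off discussed next.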

Choosing between thin and fat events often depends on factors such as network bandwidth, the complexity of downstream processing, and latency requirements; weighing them lets teams tailor system-to-system communication to each use case.

A snapshot event is a particular subtype of fat event. It captures the complete current state of a given object or system at a specific point in time. Unlike delta or incremental events, which relay only changes, snapshot events deliver a full data set. This allows downstream systems to either synchronize or initialize their state from the snapshot. While snapshot events grant complete visibility into the state of upstream systems, the onus is on the downstream systems to track changes between successive snapshots, calculate the differences or deltas, and update their state or trigger actions accordingly.
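When an upstream system only publishes snapshots, the consumer has to work out what changed itself. A minimal sketch, assuming each snapshot is a flat dictionary of account balances keyed by account ID (a hypothetical shape):

```python
from decimal import Decimal
from typing import Dict, Tuple

Snapshot = Dict[str, Decimal]  # account_id -> balance


def diff_snapshots(previous: Snapshot, current: Snapshot) -> Dict[str, Dict]:
    """Derive delta information from two successive full snapshots."""
    added = {k: current[k] for k in current.keys() - previous.keys()}
    removed = {k: previous[k] for k in previous.keys() - current.keys()}
    changed: Dict[str, Tuple[Decimal, Decimal]] = {
        k: (previous[k], current[k])
        for k in current.keys() & previous.keys()
        if previous[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


monday = {"acc-1": Decimal("100.00"), "acc-2": Decimal("50.00")}
tuesday = {"acc-1": Decimal("120.00"), "acc-3": Decimal("10.00")}
delta = diff_snapshots(monday, tuesday)
```

This is the work a delta event would have done once upstream; with snapshots, every consumer that cares about changes repeats it.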

Serialization

Serialization of events is the process of converting the structured data of an event into a format that can be easily stored or transmitted, and later reconstructed. In the context of event-driven architectures, serialization allows events to be packaged into a format that can be sent across different services, systems, or network components. This format could be JSON, XML, Protobuf, Avro, or other serialization formats. Once the event reaches its destination, it is deserialized back into its original structure for further processing or analysis. Serialization ensures that complex data structures within the event are simplified into a format that can be easily shared and understood across different parts of a system.

In event-driven architectures, one of the pivotal considerations is the choice of serialization for events or messages that traverse the system. This is crucial for both performance and compatibility among the diverse services that may be consuming or producing events. Three popular serialization options are JSON, Protocol Buffers (protobuf), and Avro, each with its own set of advantages and disadvantages.

JSON (JavaScript Object Notation) is a text-based, human-readable format that's easy to understand and widely supported across programming languages. It's the go-to choice for many developers due to its simplicity and ease of use. However, the text-based nature of JSON can be a drawback in terms of size and speed, making it less efficient for transmitting large volumes of data or for real-time processing requirements commonly encountered in payments systems.
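To illustrate the size point, the sketch below serializes the same payment event as compact JSON and as a hand-rolled fixed binary layout built with Python's `struct` module. The binary layout is not protobuf or Avro; it is only a stand-in for a schema-driven binary encoding, where field names live in the schema rather than in every message. The event fields are hypothetical, and the amount is kept in minor units (cents) so no floating-point value is involved.

```python
import json
import struct

event = {
    "payment_id": 1234567,
    "amount_minor": 12550,    # 125.50 in cents
    "currency": "EUR",
    "timestamp": 1700000000,  # Unix seconds
}

# Text encoding: self-describing, every field name travels with the data.
as_json = json.dumps(event, separators=(",", ":")).encode("utf-8")

# Binary encoding under an agreed layout: unsigned 64-bit id, signed 64-bit amount,
# 3-byte currency code, unsigned 64-bit timestamp, big-endian with no padding ("!").
LAYOUT = struct.Struct("!Qq3sQ")
as_binary = LAYOUT.pack(event["payment_id"], event["amount_minor"],
                        event["currency"].encode("ascii"), event["timestamp"])

# Both round-trip, but only the binary reader needs to know the layout in advance.
decoded_json = json.loads(as_json)
pid, amount, currency, ts = LAYOUT.unpack(as_binary)
decoded_binary = {"payment_id": pid, "amount_minor": amount,
                  "currency": currency.decode("ascii"), "timestamp": ts}
```

The binary form is 27 bytes and unreadable without `LAYOUT`; the JSON form is several times larger but can be inspected and parsed by anyone.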

Protocol Buffers, or protobuf, is a binary serialization format developed by Google. Unlike JSON, protobuf messages are not self-describing, meaning they require a predefined schema to be interpreted. However, they offer significant advantages in terms of speed and size, making them highly efficient for transmitting large or complex datasets. Additionally, protobuf's numbered fields support backward- and forward-compatible schema evolution, a crucial feature when dealing with evolving data structures in a distributed system.

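Avro, an Apache Software Foundation project, is another binary serialization format that sits somewhere between JSON and protobuf. Like protobuf, Avro is efficient in terms of both size and speed. Unlike protobuf, Avro data is always read together with the schema it was written with: Avro container files embed that schema in the file header, and messaging setups commonly attach a schema identifier that is resolved through a schema registry. This makes Avro data effectively self-describing and gives greater flexibility when evolving schemas, at the cost of slightly larger messages when the full schema travels with the data.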

The choice of serialization in event-driven architectures is highly contextual. JSON is easy to use and widely supported but may not be the best choice for systems requiring high throughput or low latency. Protobuf offers efficiencies in size and speed but requires a predefined schema, making it less flexible for certain use cases. Avro strikes a balance, offering both efficiency and flexibility, but may produce slightly larger messages when schema information is included.
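Whichever format is chosen, schema evolution is what lets producers and consumers be deployed independently. The sketch below shows the idea in plain JSON with a tolerant reader: a consumer built for version 2 of a hypothetical payment event supplies a default for a field that version 1 producers never sent, and ignores fields it does not know. Protobuf and Avro provide comparable rules through their schema definitions (field numbers and defaults in protobuf, schema resolution with defaults in Avro); this code uses neither library.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class PaymentInitiatedV2:
    payment_id: str
    amount_minor: int
    currency: str
    channel: str = "unknown"  # added in v2; the default keeps v1 events readable


KNOWN_FIELDS = {f.name for f in fields(PaymentInitiatedV2)}


def read_payment(raw: str) -> PaymentInitiatedV2:
    data = json.loads(raw)
    # Ignore fields this consumer does not know (forward compatibility) and
    # fall back to defaults for fields the producer omitted (backward compatibility).
    return PaymentInitiatedV2(**{k: v for k, v in data.items() if k in KNOWN_FIELDS})


v1_event = '{"payment_id": "p1", "amount_minor": 500, "currency": "EUR"}'
v3_event = ('{"payment_id": "p2", "amount_minor": 900, "currency": "GBP", '
            '"channel": "mobile", "device_id": "d-7"}')
old = read_payment(v1_event)
new = read_payment(v3_event)
```

Required fields still have no default, so an event missing `payment_id` fails loudly instead of being silently accepted.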

We have discussed event-driven architectures in financial environments. One final point to consider when introducing EDAs into your technology stack is that the architecture allows for easy adaptation and evolution. As business needs change, new services can be added or existing ones modified without disrupting the entire system. If a new regulation mandates additional checks before processing a payment, a new service can be introduced to handle that specific requirement, subscribed to the relevant events, and deployed without a system-wide overhaul.

You may want to continue reading the rest of the event-driven architecture series: